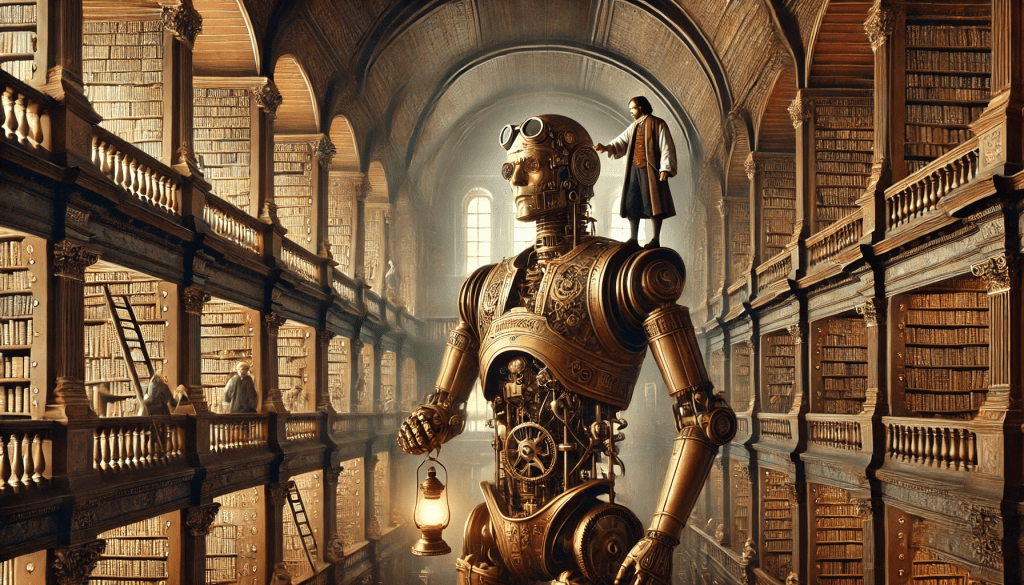

WTF is ChatGPT? Not an (autonomous) agent but a library-that-talks, and it is changing your life

- Barandiaran, X. E., & Almendros, L. S. (2024). Transforming Agency. On the mode of existence of Large Language Models (arXiv:2407.10735). arXiv. http://arxiv.org/abs/2407.10735

I have been working with Lola Almendros for almost two years on this paper. It has taken looong to finish. But it is now available as a preprint. Here is a quote of one of the central ideas:

ChatGPT operates as a gigantic (…) library-that-talks, enabling a dialogical engagement with the vast corpus of human knowledge and cultural heritage it has ‘internalized’ (compressed on its transformer multidimensional spaces) and that it is capable of recruiting effectively in linguistic exchange. The machine’s interlocution, though devoid of personal intentionality, bears the trace of human experience as transposed into digitalized textuality. The purpose-structured and bounded automatic interlocution, however, can be experienced as a genuine dialogue by the human subject.

Generative technologies, and more specifically Large Language Models (like ChatGPT, Gemini, Mixtral, Llama, or Claude), are rapidly expanding and populating our everyday toolbox and interaction space. If philosophy is understood as the practice of crafting (new) concepts to (better) organize our life… we have some work to do! Many conceptualize LLMs as mere “dumb statistical engines”, others as “sentient persons”… But what are they exactly? We know they are capable of surpassing existing intelligence benchmarks while, at the same time, failing to solve some kinds of simple puzzles.

Inspired by Simondon’s insight that part of our alienation regarding technology has to do with our lack of understanding of what technical objects are and how they work, this paper delves into the architecture, processing and systemic couplings under which LLMs (like ChatGPT) operate. We contrast this operational structure with that of living agents and conclude that LLMs fail to meet the requirements that characterize such agents. If not as agents, then… how to categorize LLMs? Here is one proposal:

[M]ore than a self-bootstrapped Artificial Intelligence, ChatGPT, as an interlocutor automaton, is a computational proxy of the human collective intelligence externalized into a digitalized written body. It is, in turn, shaped and taken care of by hundreds of human and non-human lives. […] This happens not just at a contextual level or as an operational environment, but at a constitutive level. No LLM is an island. And their performative power, and derived agentive capacities (if any), inherently rest on human and planetary scale life.

Moreover:

LLMs display capacities that effectively mobilize human intelligence as embodied in massive textuality, affectively mobilize human intelligence in conversation, and can activate forms of hybrid agency previously unavailable for human intelligence.

And yet, the scale of LLM operations is immense, beyond the human capacity to fully conceptualize it. For example, GPT-3 was trained on 570 GB of text data, equivalent to around 2 million books, which would take a human over 500 years to read. Moreover, the processing tasks performed by LLMs involve computational operations on a scale that would take a human expert millions of years to replicate if carried out step by step. We conclude:

By a digitality that deep, it is reasonable to hold that the boundary between invention and discovery, between artifact and nature, between engineering and science is somewhat blurred. We have built LLMs as much as we have discovered their emergent capabilities.
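For readers who want to sanity-check the corpus-scale figures mentioned above (570 GB, around 2 million books, over 500 years of reading), here is a rough back-of-envelope sketch in Python. Only the 570 GB and 2 million books come from the text; the bytes-per-word ratio, the reading speed, and the idea of reading non-stop around the clock are illustrative assumptions of this sketch, not figures from the paper.

```python
# Back-of-envelope check of the corpus-scale figures quoted in the post.
# From the text: 570 GB of training text, roughly 2 million books.
# Assumptions (NOT from the text): about 6 bytes per English word
# (including the trailing space), 250 words read per minute, and
# reading non-stop, 24 hours a day, 365 days a year.

CORPUS_BYTES = 570e9        # 570 GB, from the text
BOOKS = 2_000_000           # from the text
BYTES_PER_WORD = 6          # assumption
WORDS_PER_MINUTE = 250      # assumption
MINUTES_PER_YEAR = 60 * 24 * 365


def reading_years(corpus_bytes, bytes_per_word, words_per_minute):
    """Years needed to read the corpus without ever stopping."""
    words = corpus_bytes / bytes_per_word
    minutes = words / words_per_minute
    return minutes / MINUTES_PER_YEAR


# Average size of one "book" implied by the two figures in the text.
bytes_per_book = CORPUS_BYTES / BOOKS
years = reading_years(CORPUS_BYTES, BYTES_PER_WORD, WORDS_PER_MINUTE)

print(f"{bytes_per_book / 1e3:.0f} kB of text per book")
print(f"{years:.0f} years of non-stop reading")
```

Under these assumptions the estimate lands in the several-hundred-years range, which is consistent with "over 500 years"; a slower reader, or one who sleeps, would need considerably longer.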

This paper is a preliminary (ontological) analysis of LLMs and transformer technologies and their coupling with human agency. Our goal is to address the deep technopolitical challenges and opportunities that this type of device (and its social-ecological support networks) has opened.

- Barandiaran, X. E., & Almendros, L. S. (2024). Transforming Agency. On the mode of existence of Large Language Models (arXiv:2407.10735). arXiv. http://arxiv.org/abs/2407.10735

ABSTRACT: This paper investigates the ontological characterization of Large Language Models (LLMs) like ChatGPT. Between inflationary and deflationary accounts, we pay special attention to their status as agents. This requires explaining in detail the architecture, processing, and training procedures that enable LLMs to display their capacities, and the extensions used to turn LLMs into agent-like systems. After a systematic analysis we conclude that a LLM fails to meet necessary and sufficient conditions for autonomous agency in the light of embodied theories of mind: the individuality condition (it is not the product of its own activity, it is not even directly affected by it), the normativity condition (it does not generate its own norms or goals), and, partially the interactional asymmetry condition (it is not the origin and sustained source of its interaction with the environment). If not agents, then … what are LLMs? We argue that ChatGPT should be characterized as an interlocutor or linguistic automaton, a library-that-talks, devoid of (autonomous) agency, but capable to engage performatively on non-purposeful yet purpose-structured and purpose-bounded tasks. When interacting with humans, a «ghostly» component of the human-machine interaction makes it possible to enact genuine conversational experiences with LLMs. Despite their lack of sensorimotor and biological embodiment, LLMs textual embodiment (the training corpus) and resource-hungry computational embodiment, significantly transform existing forms of human agency. Beyond assisted and extended agency, the LLM-human coupling can produce midtended forms of agency, closer to the production of intentional agency than to the extended instrumentality of any previous technologies.

[…] Barandiaran, X. E., & Almendros, L. S. (2024). Transforming Agency. On the mode of existence of Large Language Models (arXiv:2407.10735). arXiv. Submitted to Minds and Machines. https://xabier.barandiaran.net/2024/07/22/transforming-agency […]